Designing and Implementing a Microsoft Azure AI Solution (AI-102)

Question 1

DRAG DROP –

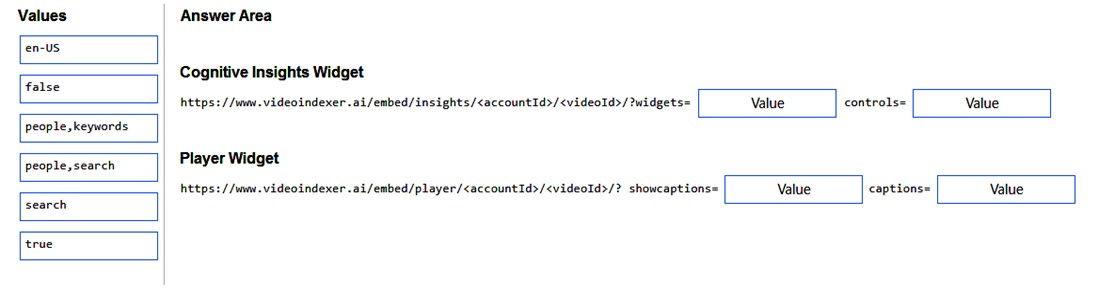

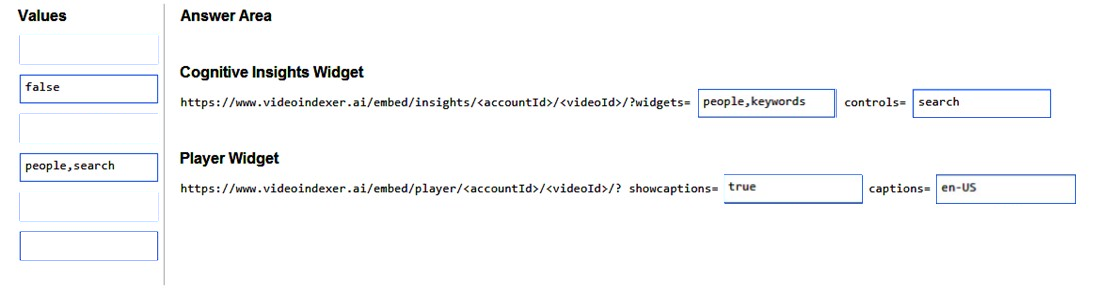

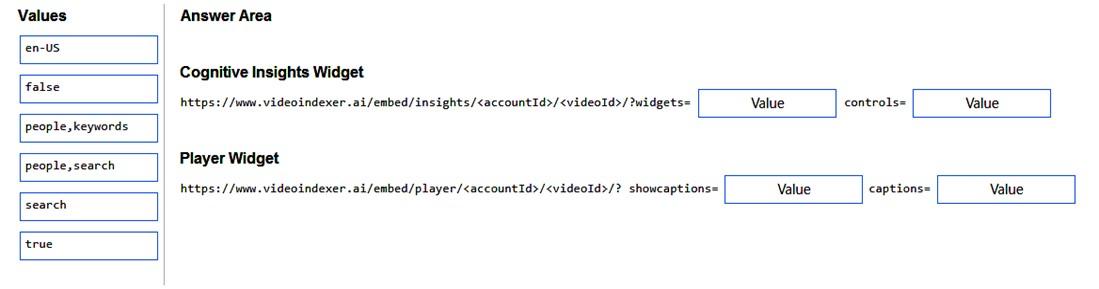

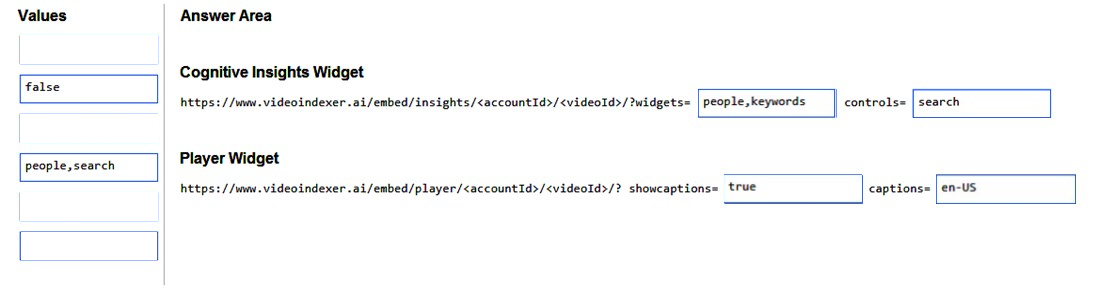

You are developing a webpage that will use the Azure Video Analyzer for Media (previously Video Indexer) service to display videos of internal company meetings.

You embed the Player widget and the Cognitive Insights widget into the page.

You need to configure the widgets to meet the following requirements:

✑ Ensure that users can search for keywords.

✑ Display the names and faces of people in the video.

✑ Show captions in the video in English (United States).

How should you complete the URL for each widget? To answer, drag the appropriate values to the correct targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place:

Answer:

Reference:

https://docs.microsoft.com/en-us/azure/azure-video-analyzer/video-analyzer-for-media-docs/video-indexer-embed-widgets
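The referenced embed-widgets documentation describes query-string parameters for both widgets. The sketch below shows one way the three requirements might map onto those parameters: `widgets` and `controls` on the Cognitive Insights widget, and `showcaptions` and `captions` on the Player widget. The helper `widget_url` and the `ACCOUNT_ID` and `VIDEO_ID` values are hypothetical placeholders, not values from the question.

```python
from urllib.parse import urlencode

# Base address of the embeddable widgets (per the referenced documentation).
EMBED_BASE = "https://www.videoindexer.ai/embed"


def widget_url(kind, account_id, video_id, **params):
    """Build an embed URL for a widget ('insights' or 'player').

    Hypothetical helper for illustration only.
    """
    # Keep commas literal so list-valued parameters stay readable.
    query = urlencode(params, safe=",")
    return f"{EMBED_BASE}/{kind}/{account_id}/{video_id}/?{query}"


# Cognitive Insights widget: show people (names and faces) and keywords,
# and enable the search control.
insights_url = widget_url(
    "insights", "ACCOUNT_ID", "VIDEO_ID",
    widgets="people,keywords",
    controls="search",
)

# Player widget: turn captions on and select English (United States).
player_url = widget_url(
    "player", "ACCOUNT_ID", "VIDEO_ID",
    showcaptions="true",
    captions="en-us",
)

print(insights_url)
print(player_url)
```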

Question 2

DRAG DROP –

You train a Custom Vision model to identify a company’s products by using the Retail domain.

You plan to deploy the model as part of an app for Android phones.

You need to prepare the model for deployment.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Answer:

Reference:

https://docs.microsoft.com/en-us/azure/cognitive-services/custom-vision-service/export-your-model

Question 3

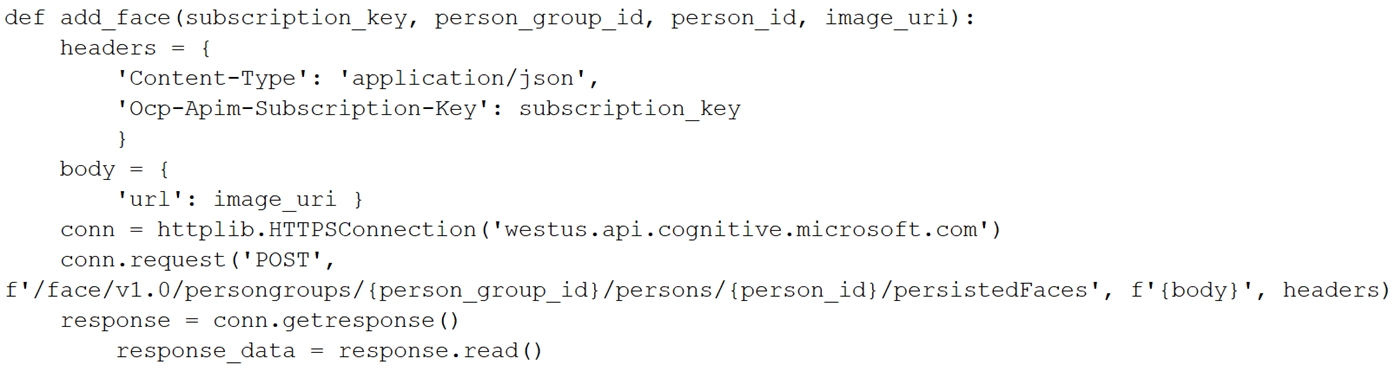

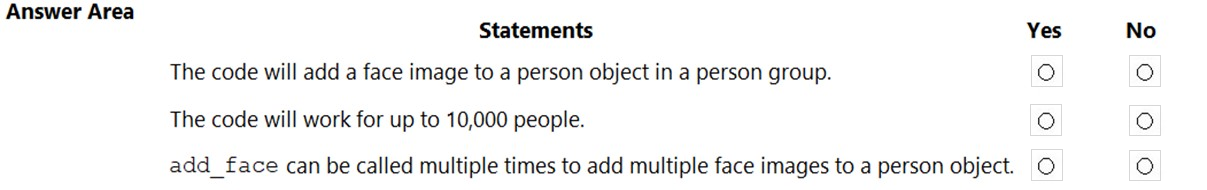

HOTSPOT –

You are developing an application to recognize employees’ faces by using the Face Recognition API. Images of the faces will be accessible from a URI endpoint.

The application has the following code.

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Reference:

https://docs.microsoft.com/en-us/azure/cognitive-services/face/face-api-how-to-topics/use-persondirectory

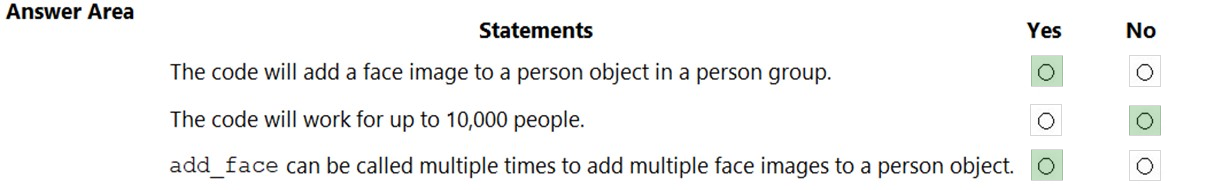

Question 4

DRAG DROP –

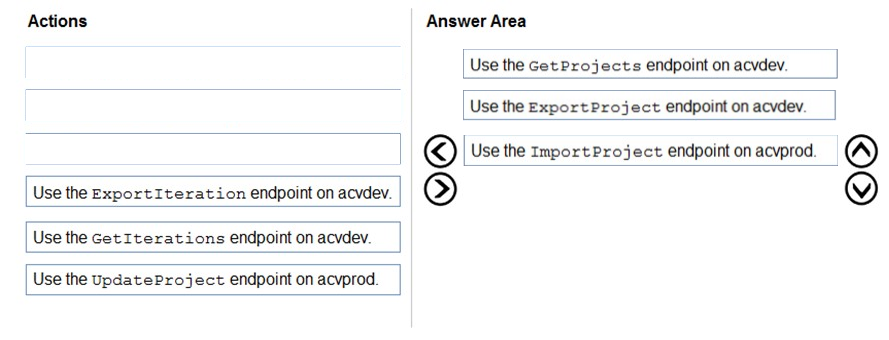

You have a Custom Vision resource named acvdev in a development environment.

You have a Custom Vision resource named acvprod in a production environment.

In acvdev, you build an object detection model named obj1 in a project named proj1.

You need to move obj1 to acvprod.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Answer:

Question 5

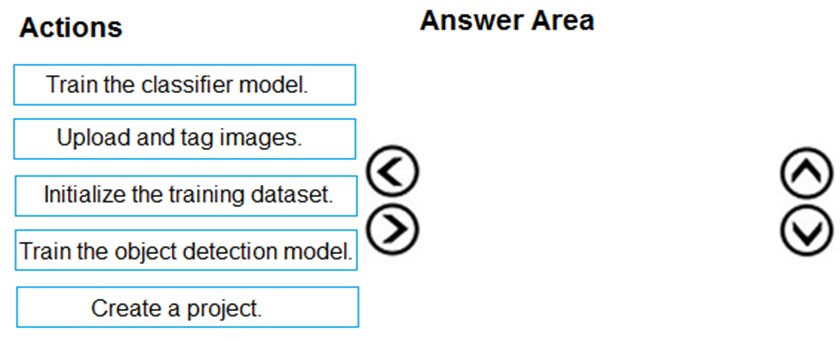

DRAG DROP –

You are developing an application that will recognize faults in components produced on a factory production line. The components are specific to your business.

You need to use the Custom Vision API to help detect common faults.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Answer:

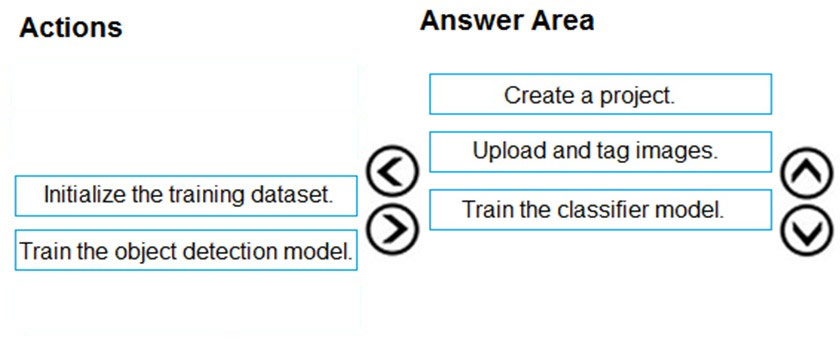

Step 1: Create a project –

Create a new project.

Step 2: Upload and tag the images

Choose training images. Then upload and tag the images.

Step 3: Train the classifier –

Train the classifier model on the uploaded, tagged images.

Reference:

https://docs.microsoft.com/en-us/azure/cognitive-services/custom-vision-service/getting-started-build-a-classifier

Question 6

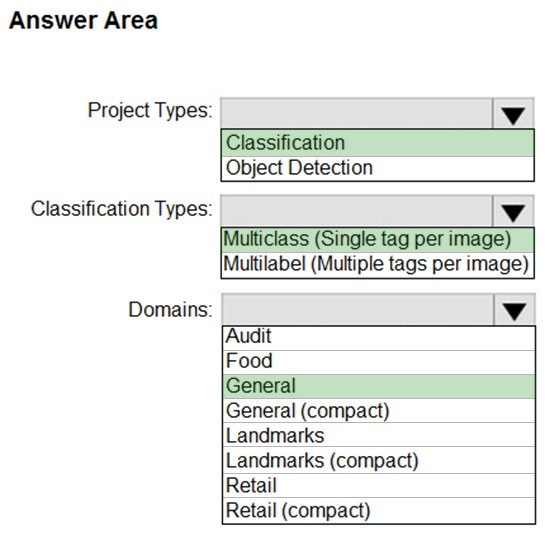

HOTSPOT –

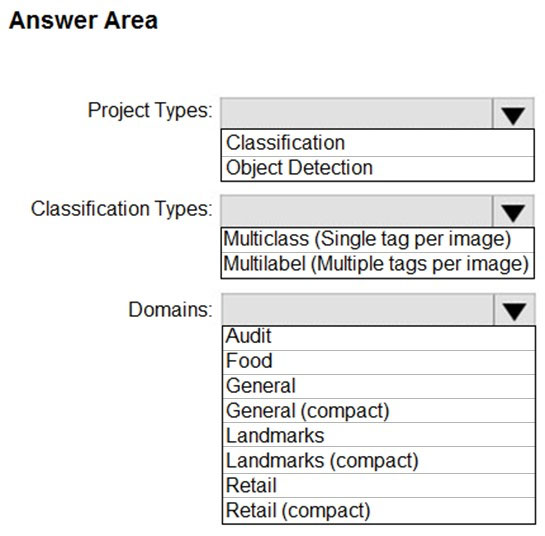

You are building a model that will be used in an iOS app.

You have images of cats and dogs. Each image contains either a cat or a dog.

You need to use the Custom Vision service to detect whether each image is of a cat or a dog.

How should you configure the project in the Custom Vision portal? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area

Answer:

Box 1: Classification –

Incorrect Answers:

An object detection project is for detecting which objects, if any, from a set of candidates are present in an image.

Box 2: Multiclass –

A multiclass classification project is for classifying images into a set of tags, or target labels. An image can be assigned to one tag only.

Incorrect Answers:

A multilabel classification project is similar, but each image can have multiple tags assigned to it.
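The difference between the two project types can be illustrated with a small sketch. It does not call the Custom Vision service. The tag probabilities are made-up values, and `multiclass_tag`, `multilabel_tags`, and the 0.5 threshold are hypothetical names and settings chosen for illustration.

```python
def multiclass_tag(probabilities):
    """Multiclass: exactly one tag per image, the most probable one."""
    return max(probabilities, key=probabilities.get)


def multilabel_tags(probabilities, threshold=0.5):
    """Multilabel: every tag whose probability meets the threshold."""
    return sorted(tag for tag, p in probabilities.items() if p >= threshold)


# An image that contains only a cat: one tag is enough.
single_subject = {"cat": 0.91, "dog": 0.09}
print(multiclass_tag(single_subject))

# An image with both animals would need a multilabel project.
both_subjects = {"cat": 0.8, "dog": 0.7}
print(multilabel_tags(both_subjects))
```

Because each image in this question contains either a cat or a dog, never both, the single-tag behavior is the one that fits.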

Box 3: General –

General: Optimized for a broad range of image classification tasks. If none of the other specific domains are appropriate, or if you’re unsure of which domain to choose, select one of the General domains.

Reference:

https://cran.r-project.org/web/packages/AzureVision/vignettes/customvision.html

Question 7

You have an Azure Video Analyzer for Media (previously Video Indexer) service that is used to provide a search interface over company videos on your company’s website.

You need to be able to search for videos based on who is present in the video.

What should you do?

- A. Create a person model and associate the model to the videos.

- B. Create person objects and provide face images for each object.

- C. Invite the entire staff of the company to Video Indexer.

- D. Edit the faces in the videos.

- E. Upload names to a language model.

Answer: A

Video Indexer supports multiple Person models per account. Once a model is created, you can use it by providing the model ID of a specific Person model when uploading/indexing or reindexing a video. Training a new face for a video updates the specific custom model that the video was associated with.
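As a rough sketch of what "providing the model ID when uploading" could look like, the snippet below only builds an upload request URL and sends nothing. It assumes the Upload Video operation accepts a `personModelId` query parameter, as the referenced documentation describes. The function name `build_upload_url` and every argument value are hypothetical placeholders.

```python
from urllib.parse import urlencode


def build_upload_url(location, account_id, name, video_url,
                     person_model_id, access_token):
    """Build (but do not send) an upload request URL that ties the video
    to a specific Person model. Hypothetical helper for illustration."""
    query = urlencode({
        "name": name,
        "videoUrl": video_url,
        "personModelId": person_model_id,  # the Person model to associate
        "accessToken": access_token,
    })
    return (
        f"https://api.videoindexer.ai/{location}"
        f"/Accounts/{account_id}/Videos?{query}"
    )


url = build_upload_url(
    location="trial",                      # placeholder region
    account_id="acct-1",                   # placeholder account ID
    name="all-hands",                      # placeholder video name
    video_url="https://example.com/v.mp4", # placeholder source URL
    person_model_id="model-123",           # placeholder Person model ID
    access_token="TOKEN",                  # placeholder access token
)
print(url)
```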

Note: Video Indexer supports face detection and celebrity recognition for video content. The celebrity recognition feature covers about one million faces based on commonly requested data sources such as IMDB, Wikipedia, and top LinkedIn influencers. Faces that aren’t recognized by the celebrity recognition feature are detected but left unnamed. Once you label a face with a name, the face and name get added to your account’s Person model. Video Indexer will then recognize this face in your future videos and past videos.

Reference:

https://docs.microsoft.com/en-us/azure/media-services/video-indexer/customize-person-model-with-api

Question 8

You use the Custom Vision service to build a classifier.

After training is complete, you need to evaluate the classifier.

Which two metrics are available for review? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

- A. recall

- B. F-score

- C. weighted accuracy

- D. precision

- E. area under the curve (AUC)

Answer: A, D

Custom Vision provides three metrics regarding the performance of your model: precision, recall, and AP (average precision). Of the options listed, only recall and precision are among them.
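For intuition, precision and recall can be computed from prediction counts as in the sketch below. The counts are made-up values, and the function names are illustrative rather than part of any Custom Vision API.

```python
def precision(true_positives, false_positives):
    """Fraction of predicted tags that were correct."""
    return true_positives / (true_positives + false_positives)


def recall(true_positives, false_negatives):
    """Fraction of actual tags that the model found."""
    return true_positives / (true_positives + false_negatives)


# Hypothetical counts: 9 correct detections, 1 false alarm, 3 misses.
tp, fp, fn = 9, 1, 3
print(precision(tp, fp))  # 0.9
print(recall(tp, fn))     # 0.75
```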

Reference:

https://www.tallan.com/blog/2020/05/19/azure-custom-vision/
